Recently I had to implement the scenario that is depicted below.

In more detail, I needed a way to collect user input (usernames), pass it to an Azure DevOps pipeline, and have that pipeline perform some actions on Azure through the az CLI.

For this solution I used the following services:

- Azure DevOps

- Power Automate

- Azure DevOps REST API

- Azure

The first thing I created was the form. In it, the user provides the usernames in the requested format so that they can be passed on to the later components.

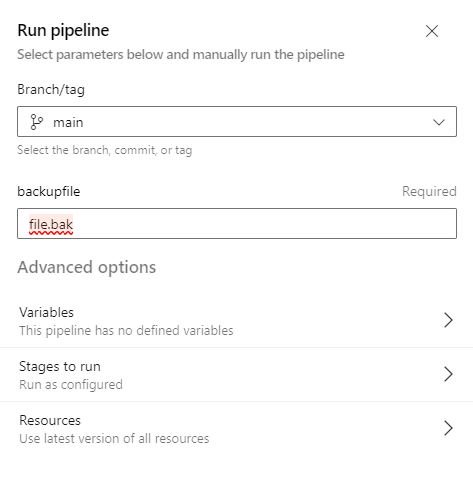

Then I created a new Power Automate flow that handles the form input and makes a POST request to the Azure DevOps REST API to trigger a build pipeline, with the form values as parameters.

The flow and the tasks used are depicted below.

Select the response ID on the form.

In the POST request, enter the pipeline ID, organization and project of your own pipeline. The body of the request should be as shown so that the parameters are parsed correctly.
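To make the shape of such a request concrete, here is a minimal Python sketch that only builds the URL and body and sends nothing. It assumes the Pipelines "Runs" endpoint, API version `7.0`, a `main` branch, and that the `users` object parameter is passed as a JSON-encoded string. None of these details come from the flow above, so adjust them to match your own setup. The function name `build_run_request` and the sample values are hypothetical.

```python
import json
from urllib.parse import quote


def build_run_request(organization, project, pipeline_id, users,
                      branch="refs/heads/main", api_version="7.0"):
    """Return (url, body) for a 'run pipeline' POST request; nothing is sent."""
    # Assumed endpoint: Pipelines > Runs > Run Pipeline.
    url = (
        f"https://dev.azure.com/{quote(organization)}/{quote(project)}"
        f"/_apis/pipelines/{pipeline_id}/runs?api-version={api_version}"
    )
    body = {
        # Branch of the repository that holds the pipeline YAML (assumed).
        "resources": {"repositories": {"self": {"refName": branch}}},
        # Assumption: the object parameter is passed as a JSON-encoded string.
        "templateParameters": {"users": json.dumps(users)},
    }
    return url, json.dumps(body)


url, body = build_run_request("myorg", "My Project", 42, ["alice", "bob"])
print(url)
print(body)
```

Authentication (for example a personal access token sent in the `Authorization` header) is left out on purpose. In Power Automate the same URL and body would go into the HTTP action.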

The Azure DevOps pipeline has an empty object as its input parameter:

    trigger: none

    pr: none

    pool:
      vmImage: windows-latest

    parameters:
    - name: users
      type: object
      default: []

    jobs:
    - job: vdi
      displayName: rest api pipeline
      steps:
      - ${{ each user in parameters.users }}:
        - task: PowerShell@2
          inputs:
            targetType: 'inline'
            script: |
              Write-Host "${{user}}"

When the user submits the form, the Power Automate flow runs and, as a result, the Azure DevOps pipeline is triggered through the REST API.

Finally, the pipeline parses the parameters provided by the form.
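As a rough mental model of that parsing step, the `each` expression in the pipeline is expanded before the run starts, producing one PowerShell step per entry in `users`. The Python sketch below only imitates that expansion for illustration. It is not how Azure DevOps implements it, and the function name `expand_steps` is hypothetical.

```python
def expand_steps(users):
    """Imitate the `each` expansion: one inline PowerShell step per user."""
    return [
        {
            "task": "PowerShell@2",
            "inputs": {
                "targetType": "inline",
                # The user value is substituted into the script text.
                "script": f'Write-Host "{user}"',
            },
        }
        for user in users
    ]


for step in expand_steps(["alice", "bob"]):
    print(step["inputs"]["script"])
```

With the default empty list, the sketch produces no steps at all, which matches a loop over an empty `users` parameter.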