When you build an application with cloud-native technologies, you typically build microservices that run in containers instead of a single monolithic application. In this post we will look at how easy it is to build a .NET application into a container image and run it on your local machine.

First we need to create the Visual Studio solution. I will do that in the Visual Studio IDE and then switch to VS Code. For my microservice I am using an ASP.NET Core Web API project with the default template code.

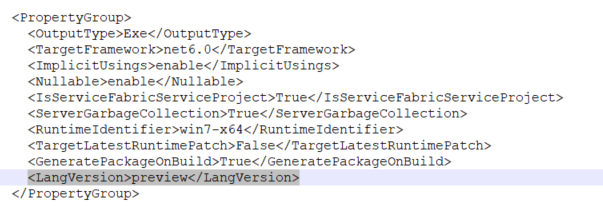

The target framework for the solution is .NET 7, the latest version at the time of writing. All other settings are left at their defaults.

When you run the app locally with IIS Express, you can reach the Swagger UI on the port defined in launchSettings.json:

https://localhost:7057/swagger/index.html

This file is located under the Properties folder, and it is where you configure the port on which the application runs. In the profiles section, under the https profile, you will find the default application URL and port. You will need this port in later steps.
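To read the port without opening the file, a small shell helper can pull every applicationUrl value out of launchSettings.json. This is only a minimal sketch: the function name `launch_urls` is hypothetical, and it assumes that `grep` is available and that you run it from the project folder, so the file is found at Properties/launchSettings.json.

```shell
# Print each URL found in an "applicationUrl" entry, one per line.
# launch_urls is a hypothetical helper name; the default path assumes
# the current directory is the project folder.
launch_urls() {
  file="${1:-Properties/launchSettings.json}"
  # First grep isolates the applicationUrl entries,
  # second grep splits out the individual http/https URLs.
  grep -o '"applicationUrl"[^,}]*' "$file" \
    | grep -oE 'https?://[^;"]+'
}
```

Running `launch_urls` in the project folder lists the URLs of every launch profile. In my case the https one is https://localhost:7057.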

Microsoft provides the following guide on creating a containerized application that runs on .NET:

Build and run an ASP.NET Core app in a container (code.visualstudio.com)

To turn our Visual Studio solution into a containerized microservice we need a Dockerfile. VS Code, with the Docker extension installed, can generate one automatically.

In the VS Code Command Palette, search for "docker add" and select "Docker: Add Docker Compose Files to Workspace".

Then select ASP.NET Core as the application platform, and after that your operating system. The next step is to select the port to expose, that is, the port on which your application will listen inside the container. Here we should provide the port that we found in launchSettings.json, or one that we configured manually. In my case I selected the solution's default, 7057.

When a popup window appears, select the option to add a Dockerfile, and the build files will be generated automatically.

Dockerfile

I altered two things in the generated Dockerfile to suit my setup. First, I changed the build configuration from Release to Debug; for production environments you should keep the Release configuration. Second, I added the environment variable ASPNETCORE_ENVIRONMENT with the value Development inside the container. The default Web API template only enables Swagger in the Development environment, so without this variable the Swagger UI would not be available in the container.

    # Runtime image: the app listens on port 7057 over HTTP, in the Development environment
    FROM mcr.microsoft.com/dotnet/aspnet:7.0 AS base
    WORKDIR /app
    EXPOSE 7057
    ENV ASPNETCORE_URLS=http://*:7057
    ENV ASPNETCORE_ENVIRONMENT=Development

    # Build stage: restore dependencies and compile with the SDK image
    FROM mcr.microsoft.com/dotnet/sdk:7.0 AS build
    WORKDIR /src
    COPY ["AspNetWebApi.csproj", "./"]
    RUN dotnet restore "AspNetWebApi.csproj"
    COPY . .
    WORKDIR "/src/."
    RUN dotnet build "AspNetWebApi.csproj" -c Debug -o /app/build

    # Publish stage: produce the deployable output
    FROM build AS publish
    RUN dotnet publish "AspNetWebApi.csproj" -c Debug -o /app/publish /p:UseAppHost=false

    # Final image: copy the published output into the runtime image
    FROM base AS final
    WORKDIR /app
    COPY --from=publish /app/publish .
    ENTRYPOINT ["dotnet", "AspNetWebApi.dll"]

With the Dockerfile in place, build the image with the docker build command.
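A build invocation might look like the sketch below. The image name `aspnetwebapi`, the tag `dev`, and the helper name `build_image` are assumptions chosen for illustration. The sketch also assumes the Dockerfile sits next to AspNetWebApi.csproj.

```shell
# Image name and tag are assumptions for illustration; pick your own.
# Docker image names must be lowercase.
IMAGE="aspnetwebapi:dev"

# Build the image from the Dockerfile in the current folder.
# -t names the image, -f points at the Dockerfile, "." is the build context.
build_image() {
  docker build -t "$IMAGE" -f Dockerfile .
}
```

Call `build_image` from the folder that contains AspNetWebApi.csproj and the Dockerfile, because the COPY instructions resolve their paths relative to the build context.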

After the build completes and the image is created, you can run a new container locally.

Keep in mind that, in order to test your container, you need to publish the container's port on your host. I used the same port number on the host, so I added the option -p 7057:7057 to the docker run command.
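As a rough sketch, the run step could look like this. The image name `aspnetwebapi:dev`, the container name `aspnetwebapi`, and the helper name `run_container` are hypothetical. Only the port 7057 and the -p mapping come from the setup described above.

```shell
IMAGE="aspnetwebapi:dev"   # assumed image name, must match what you built
CONTAINER="aspnetwebapi"   # hypothetical container name
PORT=7057                  # port exposed in the Dockerfile

# Start the container in the background.
# -d detaches, --name gives the container a fixed name,
# -p HOST:CONTAINER publishes container port 7057 on host port 7057.
run_container() {
  docker run -d --name "$CONTAINER" -p "${PORT}:${PORT}" "$IMAGE"
}
```

The -p value is read as host port first, container port second. To reach the app on a different host port, only the left-hand number changes, for example -p 8080:7057.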

The logs of the container indicate a successful run of the application.
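To read those logs from a terminal, something like the following should work. It assumes the container was started with the name `aspnetwebapi`, which is a hypothetical name, and `show_logs` is likewise just an illustrative helper.

```shell
CONTAINER="aspnetwebapi"   # hypothetical name, as passed to docker run --name

# Print everything the container has written so far.
# Use "docker logs -f" instead to keep following the output.
show_logs() {
  docker logs "$CONTAINER"
}
```

On a successful start you should see Kestrel's "Now listening on" line mentioning port 7057.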

Our application now runs as a containerized microservice on the host machine (my laptop).

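We can verify access to the application by opening the Swagger URL in a browser. Because ASPNETCORE_URLS in the Dockerfile binds the app to plain HTTP, the URL for the container is http://localhost:7057/swagger/index.html.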
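The same check can be scripted with curl. This is a minimal sketch that assumes `curl` is installed and that the container publishes port 7057 on localhost over HTTP; the helper name `swagger_ok` is hypothetical.

```shell
PORT=7057   # host port published with -p

# Succeed only if the Swagger UI answers with HTTP 200.
# -s silences progress, -o discards the body, -w prints only the status code.
swagger_ok() {
  status="$(curl -s -o /dev/null -w '%{http_code}' \
    "http://localhost:${PORT}/swagger/index.html")"
  [ "$status" = "200" ]
}
```

For example, `swagger_ok && echo "Swagger UI is reachable"` prints the message only when the page loads.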
